Streamline Seismic Interpretation with Advanced Well-Tie

XGradient Boost (XGB), a cutting-edge decision-tree ensemble learning method, has been added to the Deep QI license.

This highly optimized implementation focuses on speed, flexibility, and model performance. Automated QCs are provided to best convey ML workflow success.

Like other regression methods in the Deep QI module (SVM, DNN, RF), the XGB Regression function can be used to predict a single output target (e.g., porosity, saturation, or mineral volume) from multiple input features (e.g., Vp, Vs, and Rho logs).

XGB is a supervised machine-learning (ML) method that combines decision trees, ensemble learning, and gradient boosting: trees are added one at a time, each fitted to the errors left by the trees before it.

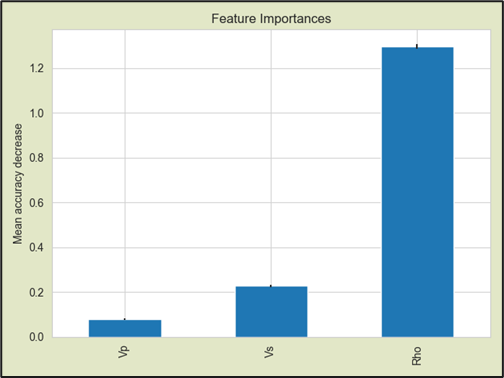
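To make the gradient boosting idea concrete, here is a minimal teaching sketch in plain Python. It is not XGBoost itself and not the Deep QI implementation: it uses depth-1 trees (stumps), a single input feature, and a squared-error objective. The function names and the synthetic Vp/porosity values are assumptions made purely for illustration.

```python
# Teaching sketch only: gradient boosting with depth-1 trees (stumps) on one feature.
# This is NOT XGBoost or the Deep QI code; the data and all names are hypothetical.
# Assumes x contains at least two distinct values.

def fit_stump(x, residuals):
    """Least-squares stump: one threshold, one constant on each side."""
    best = None
    for t in sorted(set(x))[:-1]:  # skip the largest value so the right side is never empty
        left = [r for xi, r in zip(x, residuals) if xi <= t]
        right = [r for xi, r in zip(x, residuals) if xi > t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    return best[1:]  # (threshold, left_mean, right_mean)


def stump_value(stump, xi):
    threshold, left_mean, right_mean = stump
    return left_mean if xi <= threshold else right_mean


def fit_boosted(x, y, n_rounds=50, learning_rate=0.3):
    """Start from the mean, then repeatedly fit a stump to the current residuals."""
    base = sum(y) / len(y)
    pred = [base] * len(y)
    stumps = []
    for _ in range(n_rounds):
        residuals = [yi - pi for yi, pi in zip(y, pred)]  # what is still unexplained
        stump = fit_stump(x, residuals)
        stumps.append(stump)
        pred = [pi + learning_rate * stump_value(stump, xi) for xi, pi in zip(x, pred)]
    return base, learning_rate, stumps


def predict(model, xi):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(stump_value(s, xi) for s in stumps)


def mse(y_true, y_pred):
    return sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)


# Synthetic example: porosity decreasing linearly with Vp (km/s). Values are made up.
vp = [2.0 + 0.1 * i for i in range(20)]
porosity = [0.40 - 0.08 * v for v in vp]

model = fit_boosted(vp, porosity)
mse_mean_only = mse(porosity, [sum(porosity) / len(porosity)] * len(porosity))
mse_boosted = mse(porosity, [predict(model, v) for v in vp])
print(mse_mean_only, mse_boosted)
```

Each round explains a little more of what the previous rounds missed, so the boosted error ends up well below that of the mean-only baseline. Production libraries add regularization, deeper trees, and many performance optimizations on top of this basic loop.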

Because it is based on an ensemble of decision trees, XGB allows efficient estimation of feature importances without re-training the model. The computed feature importance shows how much each input log type contributes to predicting the target variable, which facilitates interpretation of the results and gives the user useful insights.

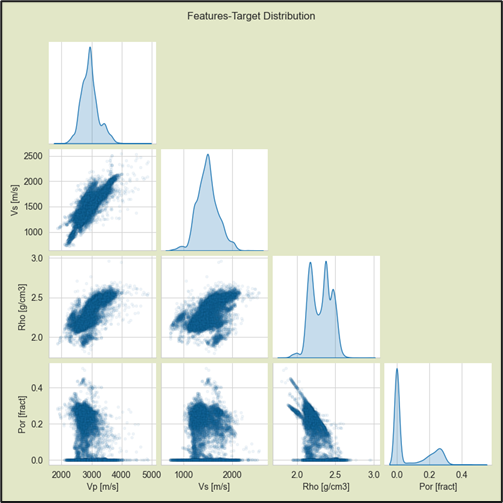
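One common way tree ensembles report feature importance is by accumulating the error reduction ("gain") of every split, grouped by the feature that was split on. The sketch below illustrates that idea in plain Python with boosted stumps. It is an assumption-laden illustration, not the importance measure Deep QI necessarily uses: the function names, the synthetic logs, and the choice of gain as the metric are all hypothetical.

```python
import random

# Illustration only: gain-based feature importance from boosted stumps.
# Not the Deep QI or XGBoost code; data, names, and the gain metric are assumptions.

def best_split(X, residuals):
    """Return (gain, feature_index, threshold, left_mean, right_mean) of the best stump."""
    mean = sum(residuals) / len(residuals)
    sse_parent = sum((r - mean) ** 2 for r in residuals)
    best = None
    for j in range(len(X[0])):
        for t in sorted(set(row[j] for row in X))[:-1]:
            left = [r for row, r in zip(X, residuals) if row[j] <= t]
            right = [r for row, r in zip(X, residuals) if row[j] > t]
            lm, rm = sum(left) / len(left), sum(right) / len(right)
            sse = (sum((r - lm) ** 2 for r in left)
                   + sum((r - rm) ** 2 for r in right))
            gain = sse_parent - sse  # squared-error reduction achieved by this split
            if best is None or gain > best[0]:
                best = (gain, j, t, lm, rm)
    return best


def gain_importances(X, y, n_rounds=30, learning_rate=0.3):
    """Boost stumps on residuals and sum each feature's gain during that single fit."""
    pred = [sum(y) / len(y)] * len(y)
    totals = [0.0] * len(X[0])
    for _ in range(n_rounds):
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        gain, j, t, lm, rm = best_split(X, residuals)
        totals[j] += gain  # credit the feature chosen in this round
        pred = [pi + learning_rate * (lm if row[j] <= t else rm)
                for row, pi in zip(X, pred)]
    total = sum(totals)
    return [g / total for g in totals]  # normalize so the importances sum to 1


# Synthetic logs (made-up values): the target depends strongly on Vp, weakly on Rho,
# and not at all on Vs.
rng = random.Random(0)
names = ["Vp", "Vs", "Rho"]
X = [[rng.uniform(2.0, 4.0), rng.uniform(1.0, 2.0), rng.uniform(2.0, 2.7)]
     for _ in range(40)]
y = [0.40 - 0.08 * vp - 0.02 * (rho - 2.0) for vp, vs, rho in X]

importances = gain_importances(X, y)
print(dict(zip(names, importances)))
```

Because the gains are collected while the trees are being built, ranking the features needs no extra training pass, which is the property the paragraph above refers to.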

A Features-Target Distribution Matrix Pairplot is used to understand the correlation between different features and different data clusters in the crossplot space. It can also be used to visually recognize outliers:

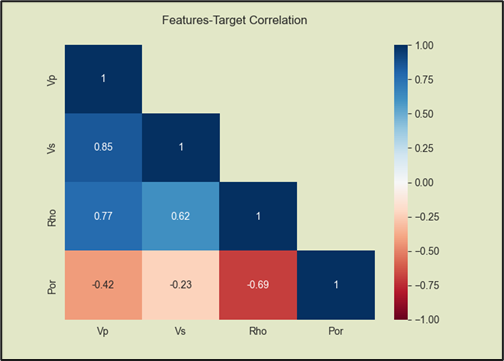

A Features-Target Correlation Heatmap is used to visualize the strength and sign of all pairwise correlations between the input data variables: the correlation of the target with each feature, as well as the correlations among the features themselves. This gives the user useful insights into the importance of each input feature in predicting the target, as well as into inter-feature dependence.
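The numbers behind such a heatmap are a matrix of pairwise correlation coefficients. The sketch below computes one in plain Python, assuming the Pearson coefficient (the source does not say which coefficient the heatmap uses). The log values and function names are hypothetical.

```python
from math import sqrt

# Illustration only: Pearson correlation matrix for a few made-up log samples.
# The choice of Pearson and all values below are assumptions.

def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)


def correlation_matrix(columns):
    """Nested dict: result[a][b] is the correlation between columns a and b."""
    names = list(columns)
    return {a: {b: pearson(columns[a], columns[b]) for b in names} for a in names}


logs = {
    "Vp": [2.5, 2.8, 3.1, 3.4, 3.7],            # km/s, hypothetical
    "Rho": [2.20, 2.28, 2.33, 2.41, 2.46],      # g/cc, hypothetical
    "Porosity": [0.30, 0.26, 0.22, 0.19, 0.15]  # fraction, hypothetical target
}

corr = correlation_matrix(logs)
for name, row in corr.items():
    print(name, {k: round(v, 3) for k, v in row.items()})
```

The sign of each entry indicates whether two variables rise together or move in opposite directions, and the magnitude indicates how strong the linear relationship is. Strongly correlated features carry overlapping information, which is the inter-feature dependence the heatmap makes visible.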

Feature Importances

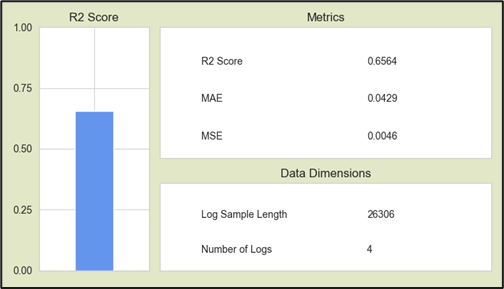

Overall uncertainty metrics are also provided for documentation.

Train with well data, then deploy at the well and in 3D. Automated parameter optimization, feedback, and metrics give the user confidence in, and understanding of, the prediction.

For more details, please explore the RokDoc Platform Add-ons page on our website!

Mar 9, 2023 1:01:34 AM
